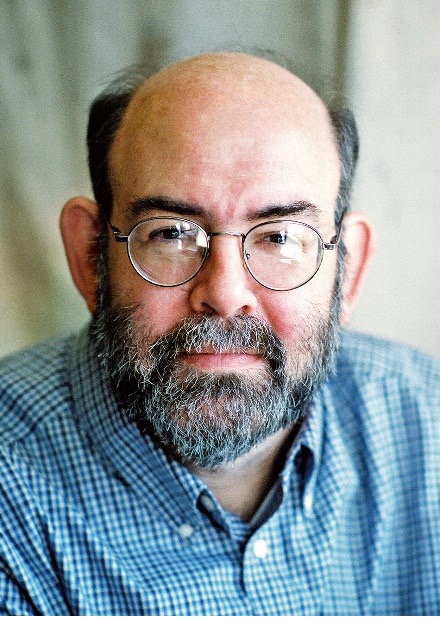

Editor’s Note: This is the second piece on Artificial Intelligence by Marcus. Click here to see the first one.

In late February 1975, I saw a notice taped to an elevator wall at MIT where I was a graduate student at the Artificial Intelligence (AI) Lab. It read, more or less, “Special Meeting: David Baltimore will discuss the moratorium on recombinant DNA research agreed upon at the Asilomar conference last week,” and gave a time and place for the meeting. At this conference, now historic, the early leaders in gene splicing all agreed to completely stop their research until techniques could be developed that would guarantee with very high probability that no mistakenly created new pathogen could survive in the wild. I felt a need to go. When I arrived, I was amazed that a third of the people in the room were also from the AI Lab. Evidently, each of us was aware that we too might need to put the brakes on our own research someday.

This became real for me only a decade later when President Ronald Reagan launched the Strategic Defense Initiative, popularly called “Star Wars,” to detect and defend against a nuclear attack from the then Soviet Union. This system would include AI-based computer systems that could autonomously launch a counterattack to avoid the delay of human decision-making. At the time, I was one of a few AI researchers at Bell Labs, and I found myself answering phone calls that started with “Can you help me with an AI problem? Say we have heat sensors that detect a missile launch … ” I would apologize, and then say that I wouldn’t help. My response followed from a moral realization from a course in Just War Theory I took as an undergrad — a human being must be in the causal chain that takes the life of a human being, even during wartime. This means that taking the life of a human being by any completely mechanical chain of events is not morally allowed — that a person must be an agent in the chain of events leading to the death.

A careful examination of the deployment of AI systems is badly overdue. Is it time for a temporary ban, like the Asilomar ban, until we are sure we can mitigate the harm that AI might cause? Is a less onerous course of action called for? And who should decide? Here we have a serious problem: AI researchers (myself included) and AI system developers have the best understanding of what these systems can do now and are likely to do in the near future, but as a field, we have failed to move responsibly. It’s obvious that both researchers and developers have a serious conflict of interest. But informed decision-making requires a deep technical knowledge that very few of the non-specialists on daily news shows possess. A minimum obligation of AI researchers is for us to strive to educate those around us to understand the technology well enough to make informed judgements. My own belief is that a rigorous, mandatory evaluation of new AI systems needs to be put into place immediately in a process that brings technologists together with sophisticated non-technologists.

Why do I think so?

To begin, is it likely that AI poses a threat to human existence, as some leaders of well-known AI companies have argued recently? Is the successor to ChatGPT likely to take over the world? I strongly doubt it. First of all, as I argued in my recent essay for Evolve, ChatGPT is far less capable than its developers would have us believe. Second, the arguments for the “singularity,” where a single computer was expected to become more intellectually capable than all of humanity by 2045, are based on the remarkable doubling of the speed of computers every two years, the so-called “Moore’s Law.”[i] This observation held steady year after year from the invention of the computer until about 15 years ago; the computer I’m typing this on is about 10,000 times faster than the computers I used when I first learned to program but not much faster than the computer I owned five years ago. We are still learning to pack more and more transistors on a single chip, although far more slowly than before. Individual computers stopped getting faster about 2005 as the individual transistors within a computer became so tiny that the radically different physics of the super small (“quantum tunnelling”) took over.

These notions of computer dominance often assume that the brain is somehow entirely self-organizing — that you just feed in lots of information and the computer figures out how to organize itself to process different modalities, for example. But we know better. The human brain, while plastic, has many areas highly organized at birth to process various specific kinds of information; for example, there is good evidence that a very specific area of the human brain is hard wired to recognize exactly and only human faces.[ii] I don’t think it’s a coincidence that the companies that depend the most on the success of these new AI models argued for their existential danger recently just as the (perhaps) surprising weaknesses of these models began to enter the popular press.

But this does not mean that the rapid development of AI doesn’t present real dangers, even if at a smaller scale. AI is now all around us, sometimes visibly but often not. Applications now commonplace include interactive dialogue systems such as Apple’s Siri and Amazon’s Alexa, translation systems like Google Translate, and aids to driving that detect out-of-lane driving and near collisions. Word processors like Microsoft Word routinely detect not only misspellings, but also ungrammatical word uses and redundant phrases.

But AI applications also now silently affect many aspects of our lives in ways we don’t notice. About 50% of the email we are sent every day is spam; AI systems do an excellent job of filtering almost all of it. For decades now, the Post Office has used AI-based handwritten character recognition to automatically route letters by ZIP code. These invisible systems often profoundly impact our lives. Machine learning systems are used behind the scenes to evaluate both loan applications and job applications; they often perform initial screenings completely autonomously. This is highly problematic: these systems are trained to reject job applicants and loan applications that are similar to those rejected by humans in the past, including those rejected for reasons of racial or other bias. Even more perniciously, very similar machine learning systems have been adopted with little fanfare within the court systems to determine whether someone accused of a crime should be offered bail or kept in jail, and to determine the length of a sentence after a conviction. Often, these “recommendations” are applied without scrutiny.

Such an AI system, often called an “algorithm” in the press, functions as a classifier; it takes in some computer-readable representation of a person, object or event, and classifies this input into one of a predetermined set of categories (say, low loan risk/acceptable loan risk/high loan risk). The trick of a machine learning system is that given only a large pre-existing collection of representations each paired with the category it was assigned by a person (collectively called the training data), the machine learner will hand back a computer classifier that will do a pretty good job of replicating the categorizations earlier assigned to similar cases. So most of the time, if such an algorithm is fed information about a new case, it will assign the same category that a person would have assigned given the same information.
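For readers who would like to see this idea in miniature, here is a rough sketch in Python. Everything in it is an assumption made for illustration: the six training cases, the two features (income and debt percentage), and the choice of a simple "nearest neighbors" learner. Real screening systems use far more data and more elaborate methods, but the shape is the same: labeled cases go in, and a classifier that imitates those labels comes out.

```python
import math
from collections import Counter

# Hypothetical training data: each case is (income in $1000s, debt as % of income)
# paired with the category a person assigned to it in the past.
TRAINING_DATA = [
    ((95, 10), "low risk"),
    ((88, 15), "low risk"),
    ((60, 30), "acceptable risk"),
    ((55, 35), "acceptable risk"),
    ((30, 60), "high risk"),
    ((25, 70), "high risk"),
]

def train(training_data, k=3):
    """Hand back a classifier that copies the labels of the k most similar past cases."""
    def classify(features):
        # Sort past cases by straight-line distance to the new case and keep the k closest.
        nearest = sorted(training_data, key=lambda case: math.dist(case[0], features))[:k]
        # The most common human-assigned label among those neighbors wins.
        votes = Counter(label for _, label in nearest)
        return votes.most_common(1)[0][0]
    return classify

classify = train(TRAINING_DATA)
print(classify((90, 12)))  # low risk
print(classify((28, 65)))  # high risk
```

Note that the classifier never learns what "risk" means. It only learns which past cases a new case resembles, and repeats whatever a person decided about them.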

There are tremendous dangers in the naïve use of machine learning systems to make life-altering decisions about human lives or even as adjuncts to humans making such decisions. First is the problem known to computer scientists as “garbage in, garbage out.” The algorithm blindly replicates the decisions in its training data, even if these were biased decisions. If there was a serious racial bias in the human decisions, for example, the system’s categorizations will unfailingly replicate this bias. Removing information that correlates with race won’t make much difference in many cases—these algorithms can find very subtle patterns in the data if such a pattern helps to correctly predict the categorizations in the original training data.
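A toy illustration of this point, again in Python and again built entirely on invented data: the past decisions below are biased against group "B", and the neighborhood codes "Z1" and "Z2" are hypothetical stand-ins for any feature that happens to correlate with group membership. The learner here (a simple majority vote per combination of features) is my own simplification, not any particular deployed system.

```python
from collections import Counter, defaultdict

# Hypothetical past human decisions: (group, neighborhood, qualified, decision).
# Qualified applicants from group "B" were rejected anyway: that is the bias.
HISTORY = [
    ("A", "Z1", True, "accept"),
    ("A", "Z1", True, "accept"),
    ("A", "Z1", False, "reject"),
    ("B", "Z2", True, "reject"),
    ("B", "Z2", True, "reject"),
    ("B", "Z2", False, "reject"),
]

def train(rows):
    """Learn the majority past decision for each (neighborhood, qualified) pair.

    The group column is deliberately ignored, as if it had been removed from the data.
    """
    votes = defaultdict(Counter)
    for _group, neighborhood, qualified, decision in rows:
        votes[(neighborhood, qualified)][decision] += 1
    table = {key: counts.most_common(1)[0][0] for key, counts in votes.items()}
    return lambda neighborhood, qualified: table[(neighborhood, qualified)]

model = train(HISTORY)
print(model("Z1", True))  # accept
print(model("Z2", True))  # reject
```

The model was never told anyone's group, yet two equally qualified applicants get different answers, because the neighborhood code carries the same information.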

Second, since the algorithm is typically trained to maximize correct answers overall, “correct” answers are answers similar to those given in the training data. In simple terms, this means that if the algorithm is tested on new cases just like the training data, it gets a very high “percent correct.” But this training criterion may well cause it to uniformly give wrong answers for a distinct subpopulation with different characteristics than the rest of the population, for example a small ethnic group of émigrés. If we know about the subpopulation in advance, we can add information to the data so that this can be discovered, but such subpopulations are often only discovered after the fact.
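The arithmetic behind this can be sketched with a small, made-up example. Assume 100 cases from a majority group in which a higher score means "yes," and 10 cases from a small subpopulation in which the pattern runs the other way. The learner below, a single cutoff chosen to maximize overall accuracy, is a deliberately simple assumption for illustration.

```python
# Hypothetical data: (group, score, correct answer).
majority = [("majority", s, s >= 5) for s in range(10)] * 10  # 100 cases
minority = [("minority", s, s < 5) for s in range(10)]        # 10 cases, reversed pattern
DATA = majority + minority

def accuracy(rule, rows):
    """Fraction of rows where the rule's answer matches the correct answer."""
    return sum(rule(score) == answer for _, score, answer in rows) / len(rows)

def train(rows):
    """Choose the cutoff t for the rule `score >= t` that maximizes overall accuracy."""
    best = max(range(11), key=lambda t: accuracy(lambda s: s >= t, rows))
    return lambda s: s >= best

rule = train(DATA)
overall = accuracy(rule, DATA)
by_group = {
    group: accuracy(rule, [row for row in DATA if row[0] == group])
    for group in ("majority", "minority")
}
print(f"overall: {overall:.0%}")                # overall: 91%
print(f"majority: {by_group['majority']:.0%}")  # majority: 100%
print(f"minority: {by_group['minority']:.0%}")  # minority: 0%
```

A headline figure of 91 percent correct looks reassuring, yet in this sketch every single member of the smaller group is misclassified. Only a breakdown by group reveals it, and that requires knowing the group exists.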

But beyond any technical failures, there is an ethical imperative, in my view: No decision of any import at all about a human being should be made mechanically, without human responsibility. If these systems could provide a proposed categorization along with an explanation of why a category was assigned, perhaps a person could evaluate the proposed category. But these systems currently function as black boxes and can provide no account of why a category was assigned.

Another threat: The near-term likelihood of AI putting large numbers of people out of work in many different areas is now apparent. While technology has frequently destroyed whole classes of jobs — there were more than 350,000 telephone operators 70 years ago, for example, and almost none today with the advent of electronic switching — these changes have taken decades. AI has the potential to eliminate vast numbers of jobs almost overnight, without the time lag needed for massive retraining of workers to move into other fields. The Jewish tradition is clear that this is wrong. Medieval rabbinic courts would forbid an individual, a kosher butcher for example, from moving into a town if that town could not support two kosher butchers. They understood that the monopoly held by the first butcher was a problem for obvious reasons, but making sure that people had a sustainable living ranked above market efficiency.[iii] By this analogy, we should forbid huge numbers of robot “truck drivers” to suddenly move to town if they will put the existing group of drivers out of business.

The use of AI on the battlefield in autonomous weapons is perhaps the most frightening near-term application of this technology. Building weapons right now that would fully autonomously seek out and destroy objects and people that meet certain observable characteristics is easily possible. A strong argument against such systems follows from the principle stated above: that no decision of any import about a human being should be made without human responsibility. This principle was formalized within Just War theory, developed originally within the Catholic Church and extended more recently, most notably by the Jewish political philosopher Michael Walzer in his book “Just and Unjust Wars.” A surprisingly relevant case from hundreds of years ago, taught in an undergrad class I took, was brought under Catholic canon law. It asked whether a farmer could stop ongoing theft from a chicken coop by rigging a tripwire to a crossbow so that the crossbow would fire if someone tried to enter the coop. The answer was absolutely not; human judgment must be directly in the chain leading to the taking of human life.

But perhaps, we now might ask, what if a robot could be given the ability to do moral reasoning, although we have absolutely no idea how to do this currently? Here, a recent Jewish source strongly answers “No.” A recent responsum of the Conservative movement authored by Rabbi Daniel Nevins unambiguously states, “We must not offer authority to a non-human actor to differentiate among human targets and decide who to kill.” It further states, “Autonomous weapons systems must not use lethal force against humans without human direction.”[iv] I note that here the community of AI researchers has taken a clear public stance, advocating for such a ban in an open letter eight years ago with thousands of signatories, including myself.

These areas provide just a sampling of the moral perils of blind implementation of AI systems. They make clear that it is now imperative and overdue that non-specialists take a role in their regulation. As an insider in this field, I can see no reason to believe that self-regulation of this industry will be any more effective than most others to date; simply speaking, conflicts of interest always get in the way once markets develop. The self-regulation of gene-splicing biologists happened well before commercialization of their technology began; with AI, the horse is already out of the barn. Technology provides us with new tools and new capabilities but has little to say about the morality of what that technology is used for. Most technology is designed to solve some real problem, but its use for many other applications is little considered. The consequences of AI are now becoming clear; it is imperative that we individually and collectively take a clear stand on what uses of this technology will be allowed in society and what potential uses are ethically unacceptable.

[i] Mathematically, this kind of doubling leads theoretically to an exponential speedup that approaches infinite speedups happening instantaneously, a very strange event that mathematicians call a “singularity.”

[ii] For more, see Nancy Kanwisher, “Functional specificity in the human brain: A window into the functional architecture of the mind,” Proceedings of the National Academy of Sciences, 2010.

[iii] For more on herem hayishuv, see David Teutsch, A Guide to Jewish Practice, Vol. 1, pp. 367-368.

[iv] Daniel Nevins et al, “Halakhic Responses to Artificial Intelligence and Autonomous Machines,” CJLS Responsum 2019, p. 41.